Complex

Boids#

This sample demonstrates the simulation and control of flocking behavior (Boids algorithm) for different types of entities, such as birds and fish. Two separate controllers manage the behavior of these groups using common flocking principles like cohesion, alignment, and separation.

You can adjust various parameters in real time to observe how they influence the behavior of each flock:

- Cohesion - strength of attraction toward the center of the flock.

- Spot Radius - distance within which other units are considered for cohesion and alignment.

- Alignment - force that aligns a unit's direction with the average heading of its neighbors.

- Separation - repulsion force that prevents units from crowding too closely.

- Separation Desired Range - distance threshold for applying separation force.

- Target - strength of the force that steers a unit toward the target.

- Unit Max Speed - upper limit on how fast a unit can move.

- Unit Max Force - maximum steering force applied for movement corrections.

- Unit Max Turn Speed - how quickly a unit can rotate to adjust direction.
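
As a rough illustration of how these parameters could combine into a single steering force, here is a minimal 2D sketch in plain C++. It is not the sample's code: `Vec2`, `Boid`, `FlockParams`, `steeringForce`, and `integrate` are hypothetical names, the default values are arbitrary, and Unit Max Turn Speed is left out for brevity.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Minimal 2D vector (illustrative; a real project would use engine math types).
struct Vec2 { float x, y; };

inline Vec2 operator+(Vec2 a, Vec2 b) { return {a.x + b.x, a.y + b.y}; }
inline Vec2 operator-(Vec2 a, Vec2 b) { return {a.x - b.x, a.y - b.y}; }
inline Vec2 operator*(Vec2 a, float s) { return {a.x * s, a.y * s}; }
inline float length(Vec2 v) { return std::sqrt(v.x * v.x + v.y * v.y); }

// Scale v down so that its length does not exceed maxLen.
inline Vec2 clampLength(Vec2 v, float maxLen) {
    const float len = length(v);
    return (len > maxLen && len > 0.0f) ? v * (maxLen / len) : v;
}

struct Boid { Vec2 position; Vec2 velocity; };

// Hypothetical parameter block mirroring the sliders listed above.
struct FlockParams {
    float cohesion = 1.0f;
    float spotRadius = 5.0f;
    float alignment = 1.0f;
    float separation = 1.5f;
    float separationRange = 1.0f;
    float target = 0.5f;
    float maxSpeed = 4.0f;
    float maxForce = 2.0f;
};

// Weighted sum of separation, cohesion, alignment, and target attraction,
// limited to maxForce.
Vec2 steeringForce(std::size_t self, const std::vector<Boid>& boids,
                   Vec2 target, const FlockParams& p) {
    const Boid& me = boids[self];
    Vec2 center{0.0f, 0.0f}, heading{0.0f, 0.0f}, push{0.0f, 0.0f};
    int neighbors = 0;
    for (std::size_t i = 0; i < boids.size(); ++i) {
        if (i == self) continue;
        const Vec2 offset = boids[i].position - me.position;
        const float dist = length(offset);
        if (dist < p.spotRadius) {  // counts toward cohesion and alignment
            center = center + boids[i].position;
            heading = heading + boids[i].velocity;
            ++neighbors;
        }
        if (dist > 0.0f && dist < p.separationRange) {
            // Push away from the neighbor, harder the closer it is.
            const float w = (p.separationRange - dist) / p.separationRange;
            push = push - offset * (w / dist);
        }
    }
    Vec2 force = push * p.separation;
    if (neighbors > 0) {
        const float inv = 1.0f / static_cast<float>(neighbors);
        force = force + (center * inv - me.position) * p.cohesion;
        force = force + (heading * inv - me.velocity) * p.alignment;
    }
    force = force + (target - me.position) * p.target;
    return clampLength(force, p.maxForce);
}

// Explicit Euler step with the speed limit applied.
void integrate(Boid& b, Vec2 force, float dt, const FlockParams& p) {
    b.velocity = clampLength(b.velocity + force * dt, p.maxSpeed);
    b.position = b.position + b.velocity * dt;
}
```

Under these assumptions, calling `steeringForce` for every unit and then `integrate` with the frame time advances the flock by one step. Two controllers would simply own two `FlockParams` instances and two separate unit lists.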

Two independent flocks (e.g., fish and birds) demonstrate how multiple controllers can operate simultaneously with distinct settings. Optional debug visualization renders bounding boxes around each flock, helping observe bounds and transitions.

Use Cases:

- Games and simulations - realistic swarm behavior for birds, fish, insects, crowds, or drones.

- Training AI systems - testing multi-agent behavior and interaction dynamics.

- Environmental storytelling - adding life to ecosystems and natural environments.

- Cinematic scenes - choreographed group movements for visual impact.

- Education and research - exploring emergent behavior in decentralized systems.

SDK Path: <SDK_INSTALLATION>source/complex/boids

Crane Rope#

Rope physics can be extremely difficult to simulate. This sample features a simple and elegant way of creating a winch or hoist by combining dynamically added ball joints (JointBall) with geometry.

You can rotate the crane, adjust the rope length, change the mass of the attached load, and detach the load to observe the resulting motion. Enabling the Visualizer via the checkbox in the sample's UI lets you see how the position of the ball joint changes in response to these parameter adjustments. The rope also includes an optional tension compensation feature, which helps maintain rope stability and realism under varying loads.

This type of winch is suitable for simulating helicopter operations or heavy-duty equipment towing. The rope is implemented as a C++ Component that you can use in your project.

SDK Path: <SDK_INSTALLATION>source/complex/crane_rope

Day-Night Switching#

This sample showcases a dynamic day-night cycle driven by the rotation of a World Light source (sun), animated according to a simulated global time. The sun's position is updated continuously or in response to manual time input. The time progression speed can be adjusted using the Timescale slider.

The sun's orientation influences both the overall scene lighting and object-specific responses: nodes are enabled or disabled, and the emission states of designated materials are adjusted, depending on whether it is currently day or night. Additional props, such as a Projected Light node and open/closed door signage, are toggled to reflect the time of day. Red and blue helper vectors visualize the zenith direction and the sun's current orientation, respectively.

Two control modes are available:

- Zenith Angle: Uses the angle between the sun's direction and the zenith (up vector). If the angle is below a threshold, it is considered daytime.

- Time-Based: Defines day and night using the Morning and Evening sliders, which set the configurable hour boundaries.
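
The two checks can be sketched in a few lines of plain C++. This is an illustration only: `Vec3`, `zenithAngleDeg`, `isDayByZenith`, and `isDayByTime` are hypothetical names, the zenith is assumed to be the +Z axis, the sun vector is assumed to point from the scene toward the sun, and the boundary handling (morning inclusive, evening exclusive) is an arbitrary choice.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

// Angle in degrees between the direction toward the sun and the zenith.
// Assumption: the zenith is +Z. A zero-length vector is reported as 180
// degrees, so it is always treated as night.
float zenithAngleDeg(Vec3 toSun) {
    const float len = std::sqrt(toSun.x * toSun.x + toSun.y * toSun.y + toSun.z * toSun.z);
    if (len <= 0.0f) return 180.0f;
    // Clamp to guard acos against rounding slightly outside [-1, 1].
    const float c = std::max(-1.0f, std::min(1.0f, toSun.z / len));
    return std::acos(c) * 180.0f / 3.14159265f;
}

// Zenith Angle mode: daytime while the angle stays below the threshold.
bool isDayByZenith(Vec3 toSun, float thresholdDeg) {
    return zenithAngleDeg(toSun) < thresholdDeg;
}

// Time-Based mode: daytime between the morning and evening hour boundaries.
bool isDayByTime(float hour, float morningHour, float eveningHour) {
    return hour >= morningHour && hour < eveningHour;
}
```

With a threshold slightly below 90 degrees, the Zenith Angle check switches to night shortly before the sun reaches the horizon, while the Time-Based check ignores the sun's position entirely and depends only on the simulated clock.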

Use Cases:

Ideal for games, simulations, or architectural walkthroughs that need consistent lighting transitions and dynamic environmental response.

SDK Path: <SDK_INSTALLATION>source/complex/day_night_switch

Fire Hose#

This sample demonstrates how to implement a fire hose system using decals and particle collisions. Foam particles are emitted from a hose, interact with the environment, and leave visual marks on surfaces. When aimed at fire sources, the foam gradually extinguishes them over time.

Foam decals accumulate and persist for a limited duration, fading and expanding gradually to simulate realistic dispersion. The emitter can either remain fixed in one direction or sweep smoothly back and forth, mimicking a real hose in motion.

This approach can be used to simulate fire suppression in interactive gameplay, emergency response training, or realistic firefighting visualizations.

SDK Path: <SDK_INSTALLATION>source/complex/fire_hose

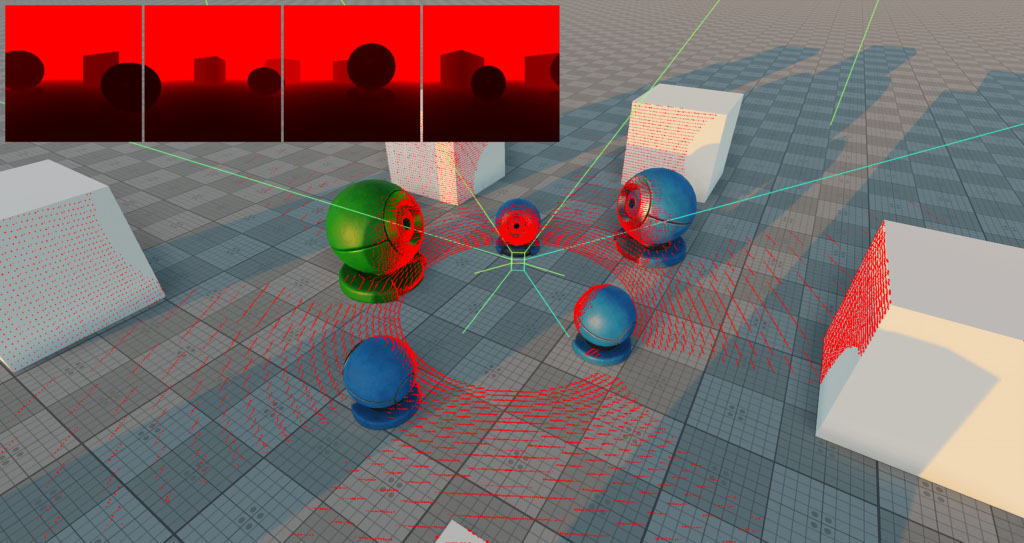

LiDAR#

This sample demonstrates a realistic simulation of a LiDAR sensor, the kind of device that self-driving cars, robots, robot vacuum cleaners, and drones use to map their surroundings. It combines four (or optionally more) virtual depth cameras to create a full 360-degree scan. The LiDAR emits rays and measures distances by rendering a depth map of the environment. In addition, a response intensity is calculated for each ray using the normal, roughness, and metalness values from the G-buffer. You can tweak its settings (scan range, FOV, resolution, etc.) via the API.
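
To make the geometry more concrete, here is a minimal plain C++ sketch of how a beam grid could be laid out over several depth cameras. It is an assumption-laden illustration rather than the sample's implementation: `Beam`, `buildBeams`, `azimuthInCamera`, and `rangeFromPlanarDepth` are hypothetical names, the cameras are assumed to split the horizontal circle into equal sectors, and the depth map is assumed to store planar depth (distance along the camera's forward axis).

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

constexpr float kPi = 3.14159265358979f;

struct Beam {
    float azimuth;    // radians in [0, 2*pi), measured around the vertical axis
    float elevation;  // radians, 0 at the horizon
    int camera;       // index of the virtual depth camera covering this beam
};

// Lay out slices * stacks beams: slices around the full circle, stacks spread
// evenly across the vertical FOV. Each camera covers an equal horizontal sector.
std::vector<Beam> buildBeams(int slices, int stacks, float verticalFovDeg, int cameras = 4) {
    std::vector<Beam> beams;
    if (slices <= 0 || stacks <= 0 || cameras <= 0) return beams;
    beams.reserve(static_cast<std::size_t>(slices) * static_cast<std::size_t>(stacks));
    const float vfov = verticalFovDeg * kPi / 180.0f;
    for (int s = 0; s < stacks; ++s) {
        const float t = stacks > 1 ? static_cast<float>(s) / static_cast<float>(stacks - 1) : 0.5f;
        const float elevation = (t - 0.5f) * vfov;
        for (int i = 0; i < slices; ++i) {
            const float azimuth = 2.0f * kPi * static_cast<float>(i) / static_cast<float>(slices);
            const int camera = (i * cameras) / slices;  // integer math avoids rounding at sector edges
            beams.push_back({azimuth, elevation, camera});
        }
    }
    return beams;
}

// Azimuth of a beam relative to the forward axis of its camera, assuming each
// camera looks at the center of its sector.
float azimuthInCamera(const Beam& b, int cameras = 4) {
    const float sector = 2.0f * kPi / static_cast<float>(cameras);
    return b.azimuth - (static_cast<float>(b.camera) + 0.5f) * sector;
}

// Convert planar depth to the distance along the beam. Valid while both
// angles stay well inside (-pi/2, pi/2).
float rangeFromPlanarDepth(float depth, float azimuthInCam, float elevation) {
    return depth / (std::cos(azimuthInCam) * std::cos(elevation));
}
```

The conversion step matters because a depth map sample at the edge of a camera's view corresponds to a longer ray than a sample at the center with the same planar depth.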

Key Features:

- Emulated LiDAR using 4 or more rendered views

- Configurable min/max range, FOV, beam resolution (number of stacks and slices)

- Asynchronous data transfer using asyncTransferTextureToImage

- Dynamic beam caching, image post-processing, and world-space point rendering

- Optional visual debugging: depth maps, scan points, and frustums

- Auto-refreshing system with internal transform and scan updates.

Use Cases:

- Autonomous vehicle simulation (robot vacuums, drones, cars)

- Autopilot and AI training using virtual LiDAR input

- Robot navigation and localization (SLAM, path planning).

SDK Path: <SDK_INSTALLATION>source/complex/lidar

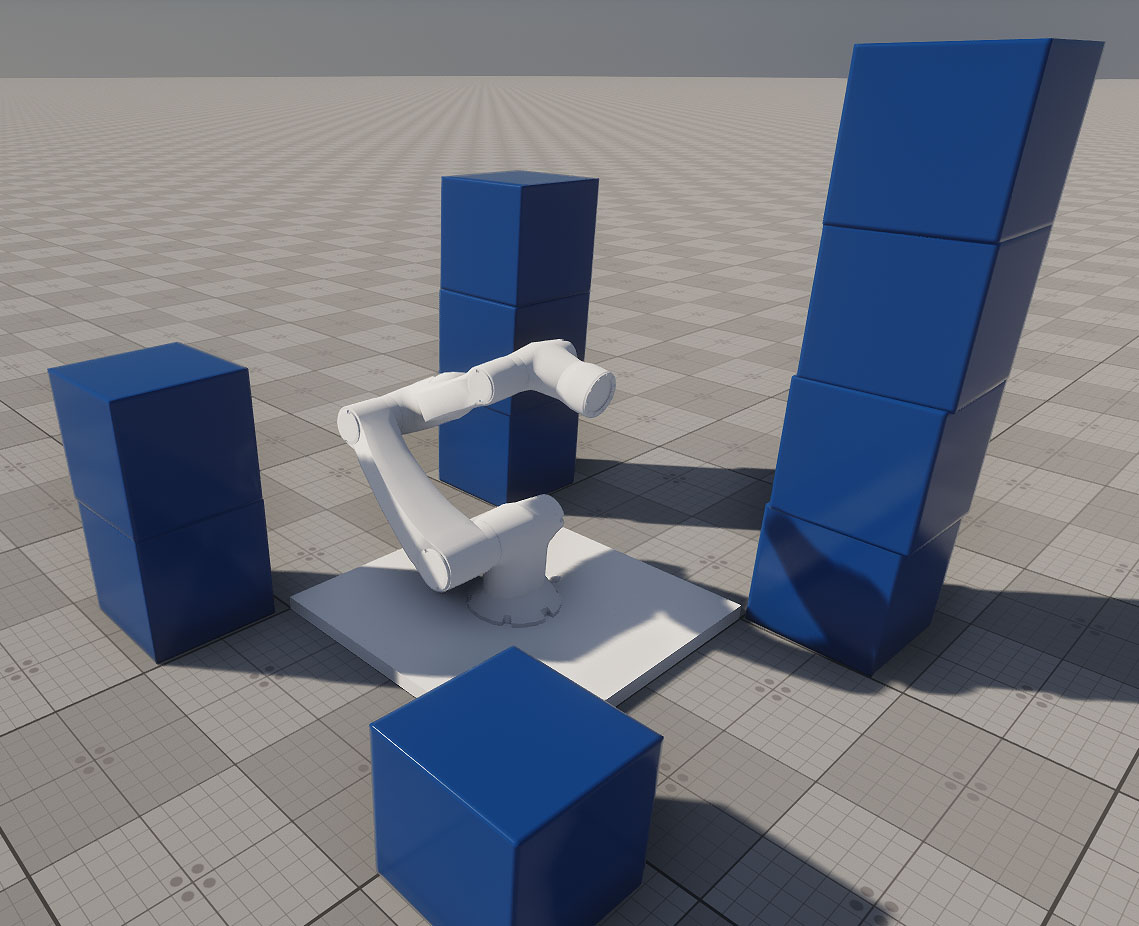

Robot Arm#

This sample demonstrates how to build a physics-based robotic arm with a kinematic chain composed of six links: one fixed and five movable. Each movable link is connected via a hinge joint (JointHinge) and driven by a motor that responds to keyboard inputs.

The arm's end effector is a magnetic gripper capable of grabbing, holding, and releasing dynamic objects in its environment. The gripper and each joint can be controlled independently via key bindings, which are configurable and demonstrated in the Controls section.

This setup provides a flexible starting point for creating custom robotic arms with any required number of degrees of freedom (DoF). You can replace manual input with a control system (e.g., inverse kinematics, AI, joystick, or ROS integration) to adapt the robot arm to your specific use case.

Use Cases:

- Simulation & prototyping of industrial robotic manipulators.

- Educational environments to teach principles of robotics, joint control, or physics-based animation.

- AI training for robotic arms using reinforcement learning or motion planning.

- Human-machine interfaces: test robotic interaction with dynamic environments.

- Virtual reality robotics simulations or operator training.

SDK Path: <SDK_INSTALLATION>source/complex/robot_arm

First-Person Controller#

This sample demonstrates how to implement and customize the first-person controller with a physical body.

SDK Path: <SDK_INSTALLATION>source/complex/first_person_controller

Observer Controller#

This sample replicates the free camera used in the UnigineEditor. The camera offers the following key features:

- Fly-Through Mode - freely move the camera in all directions using keyboard and mouse controls.

- Focus on Objects - center the camera on any selected object and adjust the distance automatically.

- Zoom & Pan - zoom in and out, and pan while preserving the view direction.

- Speed Control Menu - switch between predefined movement speeds (1-3) or adjust custom speed values.

- Position Management - set or teleport the camera to specific world coordinates through the menu.

SDK Path: <SDK_INSTALLATION>source/complex/observer_controller

Player Persecutor#

This sample demonstrates a custom third-person camera built using a PlayerDummy node, replicating and extending the behavior of UNIGINE's built-in PlayerPersecutor object.

The component calculates camera position and orientation based on user input (if enabled), target movement, and optional collision detection. The anchor point defines the offset relative to the target, while minimum and maximum angles and distances constrain camera movement. If collision is enabled, the camera uses a collision shape to detect and avoid geometry between itself and the target, adjusting its position accordingly. The camera supports both free and fixed rotation modes. When rotation is fixed, the camera maintains a stable angle relative to the target. Otherwise, it can rotate in response to mouse input.
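
The core of such a camera can be sketched as a clamped orbit around the target. The plain C++ example below is illustrative only and does not reproduce the sample's component: `OrbitLimits`, `OrbitState`, and `cameraPosition` are hypothetical names, the anchor is applied in world axes for simplicity, Z is assumed to be the up axis, and the collision shape query is replaced by a precomputed `hitDistance` argument.

```cpp
#include <algorithm>
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical limits for the vertical angle and the camera distance.
struct OrbitLimits {
    float minThetaDeg = -80.0f;
    float maxThetaDeg = 80.0f;
    float minDistance = 1.0f;
    float maxDistance = 10.0f;
};

// Current orbit parameters, typically updated from mouse input.
struct OrbitState {
    float phiDeg;    // horizontal angle around the up axis
    float thetaDeg;  // vertical angle above the horizon
    float distance;  // desired distance from the pivot
};

inline float clampTo(float v, float lo, float hi) {
    return std::max(lo, std::min(v, hi));
}

// Returns the camera position for the given target. The state is clamped in
// place so that accumulated input cannot drift outside the limits.
// hitDistance: distance from the pivot to the nearest obstacle along the
// camera ray, or a negative value if nothing is hit (stand-in for a real
// collision query).
Vec3 cameraPosition(Vec3 target, Vec3 anchor, OrbitState& s,
                    const OrbitLimits& lim, float hitDistance = -1.0f) {
    s.thetaDeg = clampTo(s.thetaDeg, lim.minThetaDeg, lim.maxThetaDeg);
    s.distance = clampTo(s.distance, lim.minDistance, lim.maxDistance);

    float d = s.distance;
    if (hitDistance >= 0.0f) d = std::min(d, hitDistance);  // pull the camera in front of geometry

    const float toRad = 3.14159265f / 180.0f;
    const float phi = s.phiDeg * toRad;
    const float theta = s.thetaDeg * toRad;

    const Vec3 pivot{target.x + anchor.x, target.y + anchor.y, target.z + anchor.z};
    return {pivot.x + d * std::cos(theta) * std::cos(phi),
            pivot.y + d * std::cos(theta) * std::sin(phi),
            pivot.z + d * std::sin(theta)};
}
```

In fixed rotation mode, `phiDeg` and `thetaDeg` would be left untouched by mouse input, so the camera keeps a stable angle relative to the target.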

This setup is useful for third-person gameplay, chase cameras, or situations where built-in logic isn't flexible enough and a fully customizable solution is needed.

SDK Path: <SDK_INSTALLATION>source/complex/player_persecutor

Spectator Controller#

This sample implements a first-person Spectator camera controller with configurable movement and physical collision detection. A Sphere collision shape lets the camera respond to collisions with geometry in the world (e.g., terrain).

Movement parameters such as speed, sprint acceleration, turning rate, and mouse sensitivity can be adjusted in real time using the sliders in the Parameters section.

Use Cases:

- Building custom camera tools with physical awareness for scene editing or testing purposes.

- Navigating and exploring 3D scenes in first person while keeping configurable interaction with the geometry.

SDK Path: <SDK_INSTALLATION>source/complex/spectator_controller

Top-Down Controller#

This sample implements several elements of a top-down strategy game: selecting one or multiple units, panning the view, rotating the camera, and smoothly focusing it on the current selection. The implementation is based on the PlayerDummy node.

SDK Path: <SDK_INSTALLATION>source/complex/top_down_controller

Non-Physical Tracks#

This sample demonstrates how to imitate vehicle tracks using non-physical (i.e., without a body assigned) track plates, wheels, and wheel joints (JointWheel). It illustrates how non-physical tracks interact with various obstacles.

SDK Path: <SDK_INSTALLATION>source/complex/track_non_physical

Physical Tracks#

This sample demonstrates how to imitate vehicle tracks using physical (i.e., having a body and a shape) track plates, wheels, and hinge joints (JointHinge). It illustrates how physical tracks behave when interacting with various obstacles and how their behavior while turning depends on the vehicle speed.

SDK Path: <SDK_INSTALLATION>source/complex/track_physical

The information on this page is valid for UNIGINE 2.20 SDK.