Search the Community

Showing results for tags 'shader'.

-

It was very hard to figure out how to write a simple shader (vertex + fragment) in UUSL within a base material, and how to pass data to it from C++. For example, I spent a long time looking for a way to pass a matrix to the shader. First I found Material::setParameter, but it had no support for matrices. Then I found Shader::setParameter, but the matrix still was not set in the shader. Only later did I somehow learn (I no longer remember how) that the matrix has to be declared in the shader inside `CBUFFER(parameters)`. Documentation and examples that tie everything together are sorely lacking, because so far the only reference is the ImGui implementation, which covers far from all aspects.

-

I want to know the purpose of this glass refraction texture, which is connected to the shader. How is it created, and why does it not look like a normal UNIGINE shader texture, but more like an alpha or ambient occlusion map? Please let me know which software was used to create this texture map and how to recreate it, if that information can be disclosed.

-

Transform Feedback Shader to change MeshObjects

sebastian.vesenmayer posted a topic in Content Creation

We would like to bake different transformation states of multiple ObjectMeshStatic nodes into one mesh to reduce the object count. The mesh created by transform feedback will only change when a transformation state in the original node tree changes. Is it possible to modify another mesh object with a transform feedback shader in Unigine, or do we need to do this on the CPU side?

-

Hi, we are developing a border detection/outline shader that renders the border of a mesh over any other objects in the scene. Some other posts ask for similar effects, but none arrived at a final solution:

https://developer.unigine.com/forum/topic/3432-object-outline-shader/?hl=%2Bdepth+%2Btest#entry18421
https://developer.unigine.com/forum/topic/2899-depth-testing-in-post-shader-precision/?hl=%2Bdepth+%2Btest#entry15842
https://developer.unigine.com/forum/topic/917-depth-test/?hl=%2Bdepth+%2Btest#entry4545

The sample post_selection_00 is the kind of effect we need, but with the border rendered over all other objects (as if the depth buffer were disabled). However, I can't figure out how to disable the depth test. Here is a sample image of what we want to achieve: Any suggestions? Thanks in advance.

-

Hello, I just installed Unigine on Linux and followed this tutorial step by step to create a custom material with a deferred pass shader. I don't get any compilation errors. I assigned the custom_mesh_material to a cube, but it is not rendered (as in the screenshot below): Is there something I have missed? Thanks in advance

-

Hello, is there any uniform that can be accessed in the geometry shader to get the supersampling scale value? I did not find one in the documentation. Thanks

-

[SOLVED] Changing and replacing indices during runtime in ObjectDynamic

sebastian.vesenmayer posted a topic in C++ Programming

Hello everyone, I am trying to change the indices of an ObjectDynamic at runtime to discard some points in Unigine 2.11. I can call clearIndices() on this object and all geometry vanishes. But when I change the index array and set it again, the changed data is not uploaded and the geometry still looks the same. Any ideas?

    int AppWorldLogic::init()
    {
        // Write here code to be called on world initialization: initialize resources for your world scene during the world start.
        m_dynamicObject = Unigine::ObjectDynamic::create();
        m_dynamicObject->setMaterialNodeType(Unigine::Node::TYPE::OBJECT_MESH_STATIC);
        m_dynamicObject->setWorldTransform(Unigine::Math::translate(Unigine::Math::Vec3(0.0, -4.0, 1.0)));
        m_dynamicObject->setMaterial("custom_forward_material", "*");
        const Unigine::ObjectDynamic::Attribute attributes[] = { { 0, Unigine::ObjectDynamic::TYPE_FLOAT, 3 } };
        m_dynamicObject->setVertexFormat(attributes, 1);
        m_dynamicObject->setSurfaceMode(Unigine::ObjectDynamic::MODE::MODE_POINTS, 0);
        m_dynamicObject->setBoundBox(Unigine::BoundBox(Unigine::Math::vec3(-10000.f, -10000.f, -10000.f), Unigine::Math::vec3(10000.f, 10000.f, 10000.f)));
        Unigine::Math::vec3 position;
        for (int i = 0; i < 1000; ++i)
        {
            for (int j = 0; j < 100; ++j)
            {
                position = Unigine::Math::vec3(i, j, 1.f);
                m_dynamicObject->addVertexFloat(0, position, 3);
            }
        }
        m_indices = new int[100000];
        for (int index = 0; index < 100000; ++index)
        {
            m_indices[index] = index;
        }
        m_dynamicObject->setIndicesArray(m_indices, 100000);
        return 1;
    }

    ////////////////////////////////////////////////////////////////////////////////
    // start of the main loop
    ////////////////////////////////////////////////////////////////////////////////
    int AppWorldLogic::update()
    {
        static int counter = 1;
        if (counter % 120 == 0)
        {
            m_dynamicObject->clearIndices();
        }
        else if (counter % 120 == 60)
        {
            for (int i = 0; i < 100000; ++i)
            {
                m_indices[i] = (rand() % 2 == 1) ? i : 0;
            }
            m_dynamicObject->setIndicesArray(m_indices, 100000);
        }
        counter++;
        return 1;
    }
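One CPU-side way to express "discard some points" is to rebuild a compacted index list that contains only the surviving points, instead of redirecting discarded entries to index 0 (which keeps drawing vertex 0). The sketch below is plain C++ and independent of the engine; `buildKeptIndices` and the `keep` predicate are hypothetical names, and it does not by itself explain why the updated array is not uploaded.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <limits>
#include <vector>

// Hypothetical helper: returns the indices of all points for which keep(i) is true.
// The result is compact, so its size() is the number of points left to draw.
std::vector<int> buildKeptIndices(std::size_t pointCount,
                                  const std::function<bool(std::size_t)> &keep)
{
    // Indices are stored as int, so the point count must fit into one.
    assert(pointCount <= static_cast<std::size_t>(std::numeric_limits<int>::max()));

    std::vector<int> indices;
    indices.reserve(pointCount);
    for (std::size_t i = 0; i < pointCount; ++i)
    {
        if (keep(i))
            indices.push_back(static_cast<int>(i));
    }
    return indices;
}
```

The resulting vector could then be passed to the same setIndicesArray(int *, int) call used in the post, with `indices.data()` and `static_cast<int>(indices.size())` as arguments.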

[SOLVED] About GEOM_TYPE_IN in shaders: how can I take a point (single vertex) as input?

roberto.voxelfarm posted a topic in Rendering

I am trying to recreate a shader like the sample at https://learnopengl.com/Advanced-OpenGL/Geometry-Shader, which uses a point as the geometry shader input. In UUSL, how can I pass a point (a single vertex) to GEOM_TYPE_IN? For now my only two valid options are GEOM_TYPE_IN(LINE_IN) and GEOM_TYPE_IN(TRIANGLE_IN). I tried parameters like VERTEX_IN, POINT_IN, etc., but all of them give me "error X3000: unrecognized identifier" for VERTEX_IN or POINT_IN. In fact, a search of the whole documentation for the GEOM_TYPE_IN shader definition returns nothing. The only reference to LINE_IN or TRIANGLE_IN appears at https://developer.unigine.com/en/docs/2.9/code/uusl/semantics?rlang=cpp under the "Geometry Shader Semantics" section. This is the shader sample from the web site, where each input vertex emits 5 output vertices as a triangle strip:

    #version 330 core
    layout (points) in;
    layout (triangle_strip, max_vertices = 5) out;

    void build_house(vec4 position)
    {
        gl_Position = position + vec4(-0.2, -0.2, 0.0, 0.0); // 1:bottom-left
        EmitVertex();
        gl_Position = position + vec4( 0.2, -0.2, 0.0, 0.0); // 2:bottom-right
        EmitVertex();
        gl_Position = position + vec4(-0.2,  0.2, 0.0, 0.0); // 3:top-left
        EmitVertex();
        gl_Position = position + vec4( 0.2,  0.2, 0.0, 0.0); // 4:top-right
        EmitVertex();
        gl_Position = position + vec4( 0.0,  0.4, 0.0, 0.0); // 5:top
        EmitVertex();
        EndPrimitive();
    }

    void main()
    {
        build_house(gl_in[0].gl_Position);
    }

/roberto

-

I have been trying to render a point cloud using a "deferred" pass material, and I have been testing different options. For example, in this sample, setting the material to "particles" works fine. I found a particle implementation with geometry, vertex and fragment shaders on the ambient pass, but it does not work; I tried many samples I found on the forums and none of them work on my side. I have tried modifying what I was testing and have not gotten anything yet. The only shader I got running was a UUSL one for an "object_mesh_static" node, but nothing yet for an "object_dynamic". In the documentation I found only two references to a UUSL geometry shader, "https://developer.unigine.com/en/docs/2.9/code/uusl/types?rlang=cpp" and a table at "https://developer.unigine.com/en/docs/2.9/code/uusl/semantics?rlang=cpp", and nothing about a working sample shader. I would very much appreciate any help with this.

    void AppWorldLogic::InitObjectDynamic03()
    {
        ObjectDynamicPtr pod;
        pod = ObjectDynamic::create();
        pod->setName("POINTS");
        //pod->setMaterial("particles", "*"); // this work
        pod->setMaterial("MyParticles", "*");
        pod->setSurfaceProperty("surface_base", "*");
        pod->setSurfaceMode(ObjectDynamic::MODE_LINES, 0); // ObjectDynamic::MODE_POINTS,
        pod->setMaterialNodeType(Node::OBJECT_MESH_DYNAMIC);
        pod->setInstancing(0);
        pod->setVertexFormat(attributesParticle, 2);
        pod->addPoints(20);
        for (int i = 0; i < pod->getNumIndices(); i++)
        {
            // vec3 vertex = vec3(Game::get()->getRandomFloat(0.0f, 1.0f), Game::get()->getRandomFloat(0.0f, 1.0f), Game::get()->getRandomFloat(1.0f, 2.0f));
            vec3 vertex = vec3((i % 2)*0.2, i%2, i*0.1);
            pod->addVertexFloat(0, vertex, 3);
            // vec2 xy = vec2(Game::get()->getRandomFloat(0.2f, 0.8f), Game::get()->getRandomFloat(0.0f, 3.1415));
            vec2 xy = vec2(0.8, 3.14);
            pod->setVertexFloat(1, xy, 2);
        }
        pod->flushVertex();
        BoundBox bb = BoundBox(vec3(-128, -128, 2), vec3(128, 128, 64));
        pod->setBoundBox(bb);
    }

fragment.shader geometry.shader MyParticles.basemat vertex.shader AppWorldLogic.cpp AppWorldLogic.h

/Roberto

-

[SOLVED] supply the normal in World Space, not Tangent Space

roberto.voxelfarm posted a topic in Rendering

Hi, we are writing a custom deferred shader and we want to supply the normal in World Space, not Tangent Space. How can we achieve this? We do not want the value we supply in gbuffer.normal to be interpreted as if it was in Tangent Space. /roberto

-

Hi, I tried to use the tessellation shader and it didn't work. I have uploaded my code so that you can check where the problem is. Alternatively, can you offer some examples of how to develop a tessellation shader in Unigine? Thanks~ tessellation_test.rar

-

To access each mesh in an ObjectMeshCluster, we mark each mesh in the cluster with an ID, and the ID is passed into the shader through a buffer or texture. In the shader, we use IN_INSTANCE to find the corresponding ID. In practice, however, we found that the ID changes with the number of visible meshes. How can we get the correct ID, as when using s_clutter_cluster_instances in the shader?

-

Hi, we have some cool effects such as Outline, Highlight and Border Detection in post shaders that require the auxiliary buffer. We now need to combine these effects at the same time, but we only have one auxiliary texture, so we tried to use the alpha channel as a kind of mask for each effect/object combination (like a viewport mask). Each shader checks the alpha channel of the auxiliary buffer and, if it matches its 'mask', renders the effect using the auxiliary RGB channels. When the 'masks' match, the effect works fine, but when they do not, some artifacts can be seen. After investigating, we realized that TAA is being applied to the auxiliary buffer and, consequently, blends the alpha channel with the old auxiliary buffer, which is what produces these artifacts. Is there any way to prevent TAA from being applied to the auxiliary buffer? Is there any way to check the old/current auxiliary buffer before TAA is applied? Or maybe any other tip to achieve this 'mask' behaviour? Thanks in advance, Javier [Mask not matching and TAA active. Orange is Outline effect and Green is Highlight effect] [Mask matching and TAA active]
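To see why temporal blending breaks an exact-match mask stored in alpha, it can help to model the encoding on the CPU. The sketch below assumes an 8-bit alpha channel and uses hypothetical helper names (`encodeMask`, `decodeMask`, `blend`); it is not engine code. Blending a pixel tagged with one mask against history tagged with another yields an intermediate alpha that decodes to a third, unrelated mask value.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical encoding: store an 8-bit mask id in a normalized alpha value.
float encodeMask(int id)
{
    assert(id >= 0 && id <= 255);
    return static_cast<float>(id) / 255.0f;
}

// Decode by rounding to the nearest 8-bit step.
int decodeMask(float alpha)
{
    return static_cast<int>(std::lround(alpha * 255.0f));
}

// Linear blend of the current value with history, as a temporal filter would do.
float blend(float current, float history, float historyWeight)
{
    return current * (1.0f - historyWeight) + history * historyWeight;
}
```

For example, `decodeMask(blend(encodeMask(200), encodeMask(100), 0.5f))` lands between the two ids and matches neither, which is consistent with the artifacts described when masks do not match.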

-

I have some questions about Unigine. 1) http://wacki.me/blog/2017/01/dynamic-snow-sand-shader-for-unity/ This web page describes features implemented in Unity3D. I need the same features for our project, so I would like to know whether Unigine 2.6.1 provides similar functionality. 2) If Unigine 2.6.1 includes these functions, how can I use them on terrain? 3) Finally, please let me know how to apply a shader to terrain in Unigine.

-

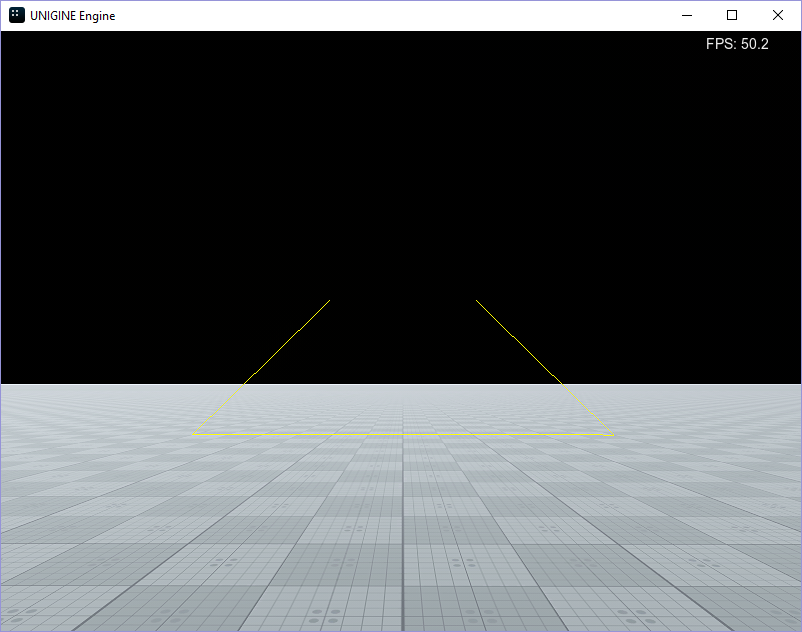

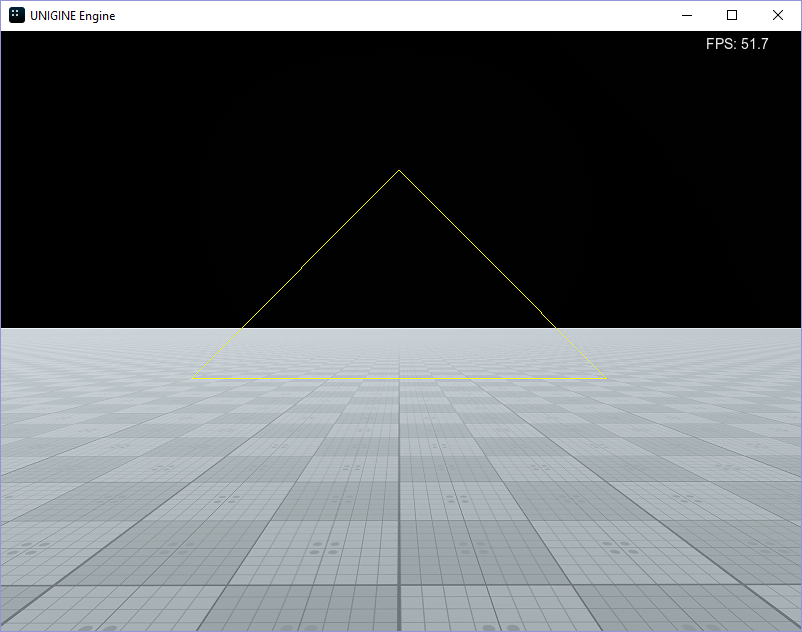

Hi! I've got a problem with drawing an ObjectDynamic as lines with a simple shader. My goal is to connect several points in the world with solid lines. The ObjectDynamic class is a good fit for this, since it can be drawn as lines. I created a new empty project and made a couple of changes (removed the material ball and switched the sun scattering mode to "moon"). The ObjectDynamic is constructed in AppWorldLogic. Only 3 points are added to the ObjectDynamic to make a triangle. The surface mode is set to MODE_LINES.

AppWorldLogic.h

    #include <UnigineObjects.h>
    using namespace Unigine;
    class AppWorldLogic : public Unigine::WorldLogic
    {
    public:
        ...
    private:
        ObjectDynamicPtr pOD;
    };

AppWorldLogic.cpp

    int AppWorldLogic::init()
    {
        pOD = ObjectDynamic::create ();
        const ObjectDynamic::Attribute attributes[] = { { 0, ObjectDynamic::TYPE_FLOAT, 3 } };
        pOD->setVertexFormat ( attributes, 1 );
        pOD->setSurfaceMode ( ObjectDynamic::MODE_LINES, 0 );
        pOD->addLineStrip ( 4 );
        pOD->addVertexFloat ( 0, Math::vec3 ( -2.0, 2.0, 1.0 ).get (), 3 );
        pOD->addVertexFloat ( 0, Math::vec3 ( 2.0, 2.0, 1.0 ).get (), 3 );
        pOD->addVertexFloat ( 0, Math::vec3 ( 0.0, 2.0, 3.0 ).get (), 3 );
        pOD->addVertexFloat ( 0, Math::vec3 ( -2.0, 2.0, 1.0 ).get (), 3 );
        pOD->setMaterial ( "lines", "*" );
        pOD->flushVertex ();
        return 1;
    }

"lines" is a basic material with very simple shaders:

lines.mat

    <?xml version="1.0" encoding="utf-8"?>
    <base_material name="lines" version="2.0" parameters_prefix="m" editable="0">
        <supported node="object_dynamic"/>
        <shader pass="deferred" node="object_dynamic" vertex="lines.vert" fragment="lines.frag"/>
    </base_material>

lines.vert

    #include <core/shaders/common/common.h>

    STRUCT(VERTEX_IN)
        INIT_ATTRIBUTE(float4,0,POSITION)
    END

    STRUCT(VERTEX_OUT)
        INIT_POSITION
    END

    MAIN_BEGIN(VERTEX_OUT,VERTEX_IN)
        float4 row_0 = s_transform[0];
        float4 row_1 = s_transform[1];
        float4 row_2 = s_transform[2];
        float4 in_vertex = float4(IN_ATTRIBUTE(0).xyz,1.0f);
        float4 position = mul4(row_0,row_1,row_2,in_vertex);
        OUT_POSITION = getPosition(position);
    MAIN_END

lines.frag

    #include <core/shaders/common/fragment.h>

    STRUCT(FRAGMENT_IN)
        INIT_POSITION
    END

    MAIN_BEGIN(FRAGMENT_OUT,FRAGMENT_IN)
        OUT_COLOR.r = 1.0f;
        OUT_COLOR.g = 1.0f;
        OUT_COLOR.b = 0.0f;
        OUT_COLOR.a = 0.0f;
    MAIN_END

It works. The triangle is visible. But I've got a problem: right after start, the triangle is only partially visible. It looks as if the triangle is clipped by some plane. This "cut-off line" moves if I change the camera elevation. But once I have tilted the camera all the way down, the triangle becomes completely visible. I can't understand where my mistake is. Additional questions: 1) How can I increase the line width? Can it be done from the fragment shader? 2) Can lines be drawn with antialiasing?

-

How would I go about implementing a "flat shaded" mesh material? By flat shading, I mean the mesh should be subject to world lighting, however triangles should be rendered as if all pixel normals are set to the face normal (e.g. for a retro-graphics look with hard-edged triangles). The "brute force" method of achieving this is to duplicate all vertices in the mesh, such that no vertices are shared. Then the three normals for each triangle can be set to the face normal by taking the cross-product of two of the edges. This is not ideal however, since it results in redundant vertex data. How would I implement this using a material? I thought it might be possible to calculate per-vertex face normals using a geometry shader, but I can't get this to play nicely with mesh_base. Is there some sample material or shader code that does this? Many thanks
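The "brute force" method described above can be sketched in plain C++ as follows. This is only an illustration of the vertex duplication and cross-product step, not a material-based solution; `Vec3`, `FlatMesh` and `unshareVertices` are hypothetical names, and counter-clockwise winding is assumed for the direction of the normal.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

Vec3 cross(Vec3 a, Vec3 b)
{
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

Vec3 normalize(Vec3 v)
{
    const float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return len > 0.0f ? Vec3{v.x / len, v.y / len, v.z / len} : v;
}

// Output mesh: three unshared vertices per triangle, each carrying the face normal.
struct FlatMesh
{
    std::vector<Vec3> positions;
    std::vector<Vec3> normals;
};

FlatMesh unshareVertices(const std::vector<Vec3> &positions, const std::vector<int> &indices)
{
    assert(indices.size() % 3 == 0);
    FlatMesh out;
    for (std::size_t t = 0; t + 2 < indices.size(); t += 3)
    {
        const Vec3 a = positions[indices[t]];
        const Vec3 b = positions[indices[t + 1]];
        const Vec3 c = positions[indices[t + 2]];
        // Face normal from the cross product of two triangle edges.
        const Vec3 n = normalize(cross(sub(b, a), sub(c, a)));
        for (const Vec3 &p : {a, b, c})
        {
            out.positions.push_back(p);
            out.normals.push_back(n);
        }
    }
    return out;
}
```

The output holds three vertices per triangle regardless of how many were shared in the input, which is exactly the redundancy the post would like to avoid.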

-

Hi, all. I am working on a VR/MR project. I need to replace the scene image with another image shot by a camera, and I am trying to create a custom post-processing shader to do this. The image on the PC monitor is OK, but unfortunately the VR headset shows double/overlapped images. To reproduce: take the post-processing UUSL example provided by Unigine, add a texture with a local picture assigned to it in the material, and then output the RGB color of this texture in the fragment shader to replace the scene image. Does anyone know the reason or a solution? Please help me figure it out. Thanks!

-

Shader Toy has lots of great WebGL shaders. As a test, I ported this one, https://www.shadertoy.com/view/llK3RR, to UUSL. It's a barrel distortion with chromatic aberration. Lessons learned:

- Use shaders_reload.
- Use EXPORT_SHADER(foobar) to dump out the preprocessed shader into foobar.hlsl or foobar.glsl so you can match up the error line numbers.
- Search+Replace of vec3 etc. gets you surprisingly far.
- Valid GLSL things like foo = float3(0.0) break when using HLSL. Expand them as foo = float3(0.0, 0.0, 0.0) and they'll work for both.
- Virtually all maths in ShaderToy code comes over without changes.

I have attached the material XML, vertex and fragment shaders to this message. Note that if you're adding this to your project you'll need to edit the materials XML to correct the path to the shaders.

post.frag shader_dev.mat post.vert

-

We've been experiencing a bug for a while where our tree billboards would flicker black in a really obnoxious way, that looked a lot like z-fighting. Recently though I've tracked it down and discovered that it isn't caused by z-fighting after all. We assumed it was so dark because it was caused by shadowed billboards being sorted incorrectly and showing up in front of other trees, but it's actually a problem with alpha testing not working properly. The first attached image shows this problem manifesting itself in a line. This is the point where the juniperoxy billboard intersects the willow billboard (the tree). You can see this in the second image where alpha test is turned off for the juniperoxy. Looking at the 4th image, you can see it's not just rendering black pixels there, it's actually rendering the transparent portion of the billboard image as opaque. For some reason when two billboard polys intersect in a certain way, it renders part of the image at 100% opaque alpha. In the short term, I've worked around this by painting a green tree pattern into the black portions of the tree billboards, and it's reduced the amount of flickering the user can see, however it's still very noticeable on groups of trees with some color variation. Is there a fix for this? We're on version 2.2.1, btw.

-

I am trying to create a custom vertex, geometry and fragment shader. I would like to pass in a float texture and an RGB color texture every frame, but I am running into some problems. I have tried updating a meshImage with new test texture data every frame before passing it to the vertex shader. In my vertex shader, the value read from the texture is passed through the geometry shader and the fragment shader before a color is rendered (following the meshLines sample). It seems that my texture ("depthTexture") in the vertex shader is never set when I use material->setImageTextureImage(id, meshImage, 1); the values I get back in the shader are all zero when I call: float4 texValue = depthTexture.SampleLevel(s_sampler_0,IN.texcoord_0.xy,0.0f); I've attached the project I'm working in and the accompanying material/shader files (D3D11AppQt.zip + data.zip). Here is the relevant code:

    Unigine::MaterialPtr material = dynamicMesh->getMaterial(0);
    Unigine::ImagePtr meshImage = Unigine::Image::create();
    int width = 512;
    int height = 424;
    meshImage->create2D(width, height, Unigine::Image::FORMAT_RGBA8);
    id = material->findTexture("depthTexture");
    if (id != -1)
    {
        int result = material->getImageTextureImage(id, meshImage);
        //int result = material->getProceduralTextureImage(id, meshImage);
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                // random test values
                Unigine::Image::Pixel p;
                p.i.r = 255; p.i.g = 255; p.i.b = 255; p.i.a = 255;
                p.f.r = .75; p.f.g = .75; p.f.b = .75; p.f.a = .75;
                //p.f.r = .5; p.f.g = 5.0; p.f.b = .5; p.f.a = 1.0;
                meshImage->set2D(x, y, p);
            }
        }
        material->setImageTextureImage(id, meshImage, 1);
        //material->setProceduralTextureImage(id, meshImage);
    }

My material (.mat file):

    <materials version="1.00" editable="0">
        <!-- /* Mesh lines material */ -->
        <material name="lumenous_tron" editable="0">
            <!-- options -->
            <options two_sided="1" cast_shadow="0" receive_shadow="0" cast_world_shadow="0" receive_world_shadow="0"/>
            <!-- ambient shaders -->
            <shader pass="ambient" object="mesh_dynamic" vertex="core/shaders/lumenous/lumenous_tron_vertex.shader" geometry="core/shaders/lumenous/lumenous_tron_geometry.shader" fragment="core/shaders/lumenous/lumenous_tron_fragment.shader"/>
            <texture name="depthTexture" anisotropy="1" pass="ambient"/>
            <parameter name="customVector" type="constant" shared="1">0.0 0.0 0.0 0.0</parameter>
            <parameter name="customMatrix" type="array" shared="1"/>
        </material>
    </materials>

and vertex shader:

    Texture2D depthTexture : register(t0);

    /* */
    VERTEX_OUT main(VERTEX_IN IN)
    {
        VERTEX_OUT OUT;
        float4 row_0 = s_transform[0];
        float4 row_1 = s_transform[1];
        float4 row_2 = s_transform[2];
        float4 position = IN.position;
        OUT.position = mul4(row_0,row_1,row_2,position);
        float4 previous = float4(IN.texcoord_1.xyz,1.0f);
        OUT.texcoord_0 = float4(mul4(row_0,row_1,row_2,previous).xyz,IN.texcoord_1.w);
        OUT.texcoord_1 = IN.texcoord_2;
        float4 texValue = depthTexture.SampleLevel(s_sampler_0,IN.texcoord_0.xy,0.0f);
        //float4 texValue = depthTexture.Sample(s_sampler_0,IN.texcoord_0.xy);
        //float4 texValue = float4(.4, 0.0, 0.0, 1.0);
        if(texValue.x < .5)
        {
            OUT.texcoord_0 = float4(1.0, 0.0, 0.0, 1.0);
            OUT.texcoord_1 = float4(1.0, 0.0, 0.0, 1.0);
        }
        else
        {
            OUT.texcoord_0 = float4(0.0, 0.0, 1.0, 1.0);
            OUT.texcoord_1 = float4(0.0, 0.0, 1.0, 1.0);
        }
        return OUT;
    }

Can you point me in the right direction? How do I pass a texture to a vertex shader every frame and read its values? Thanks!

D3D11AppQt.zip data.zip

-

Hi, I was wondering if there are any examples of programmatically creating a shader, and more specifically, of passing a custom array of 4x4 matrices to a shader? I see examples of vec4s throughout the material examples, e.g. <parameter name="normal_transform" type="expression" shared="1">vec4(1.0f,1.0f,0.0f,time)</parameter> Are there any tips or examples that you can point me to? I have custom 4x4 matrices (projection, rotation and translation) that are generated only at runtime and that I would like to pass into a shader. Thanks!
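Independent of how the engine uploads it, a matrix generated at runtime usually has to be composed on the CPU and then split into float4-sized rows before it can be written into a shader array. A minimal sketch in plain C++, assuming row-major storage and column vectors; `Mat4`, `identity`, `translation`, `rotationZ`, `multiply` and `row` are hypothetical helpers, not Unigine types or calls:

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 4x4 matrix: element (r, c) is stored at m[r * 4 + c].
using Mat4 = std::array<float, 16>;

Mat4 identity()
{
    Mat4 m{};
    m[0] = m[5] = m[10] = m[15] = 1.0f;
    return m;
}

Mat4 translation(float x, float y, float z)
{
    Mat4 m = identity();
    m[3] = x; m[7] = y; m[11] = z;
    return m;
}

Mat4 rotationZ(float radians)
{
    Mat4 m = identity();
    const float c = std::cos(radians);
    const float s = std::sin(radians);
    m[0] = c; m[1] = -s;
    m[4] = s; m[5] = c;
    return m;
}

// Standard matrix product a * b.
Mat4 multiply(const Mat4 &a, const Mat4 &b)
{
    Mat4 out{};
    for (int r = 0; r < 4; ++r)
        for (int c = 0; c < 4; ++c)
            for (int k = 0; k < 4; ++k)
                out[r * 4 + c] += a[r * 4 + k] * b[k * 4 + c];
    return out;
}

// Returns row r as four floats, the shape one float4 array element would take.
std::array<float, 4> row(const Mat4 &m, int r)
{
    assert(r >= 0 && r < 4);
    return {m[r * 4], m[r * 4 + 1], m[r * 4 + 2], m[r * 4 + 3]};
}
```

With this layout, one 4x4 matrix occupies four consecutive float4 entries. Whether the shader side expects rows or columns depends on the multiplication helper it uses, so the order may need to be transposed.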

-

[WONTFIX] Saturation/Desaturation for all materials

werner.poetzelberger posted a topic in Feedback for UNIGINE team

Hi, I think it would be quite handy to have a saturation/desaturation option for each material for the final appearance, between the actual color as it is rendered and a grey value. Maybe it as well could be extrapolated towards a higher saturation. Like (0<-1(as it is)->2). We had implemented this option for our shaders using our previous engine. It is very handy when it comes to bringing together many scene elements, as the composition of the final image is much easier and more pleasing. Best. Werner
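The requested control can be modelled as a linear interpolation (and extrapolation) between a grey value and the rendered color. The sketch below is only an illustration in plain C++: `Color` and `adjustSaturation` are hypothetical names, and the Rec. 709 luma weights used for the grey value are an assumption, since the post does not say how grey should be computed.

```cpp
#include <cassert>

struct Color { float r, g, b; };

// saturation: 0 = fully grey, 1 = color as rendered, 2 = extrapolated saturation.
Color adjustSaturation(Color c, float saturation)
{
    assert(saturation >= 0.0f && saturation <= 2.0f);
    // Grey value from Rec. 709 luma weights (an assumption, see above).
    const float grey = 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b;
    return {grey + (c.r - grey) * saturation,
            grey + (c.g - grey) * saturation,
            grey + (c.b - grey) * saturation};
}
```

Values above 1 can push individual channels outside the 0..1 range, so a real implementation would likely clamp the result afterwards.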