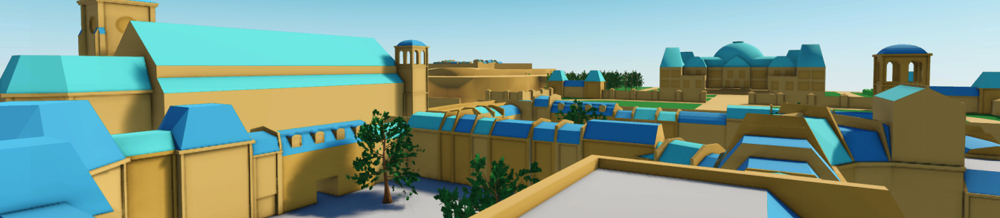

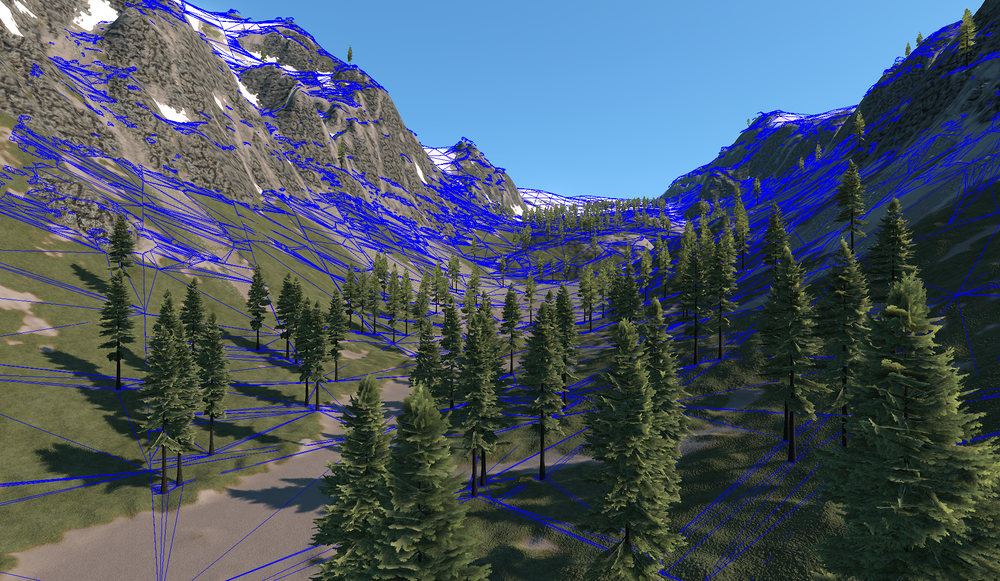

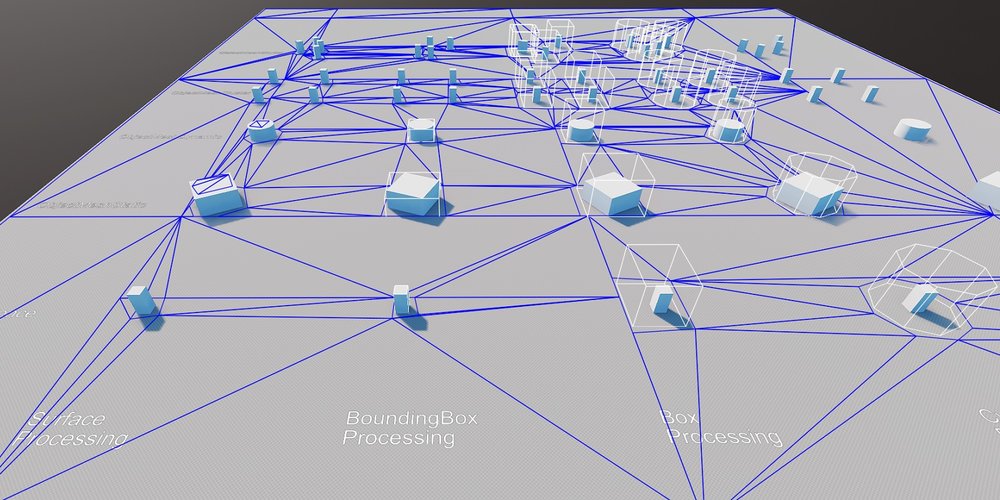

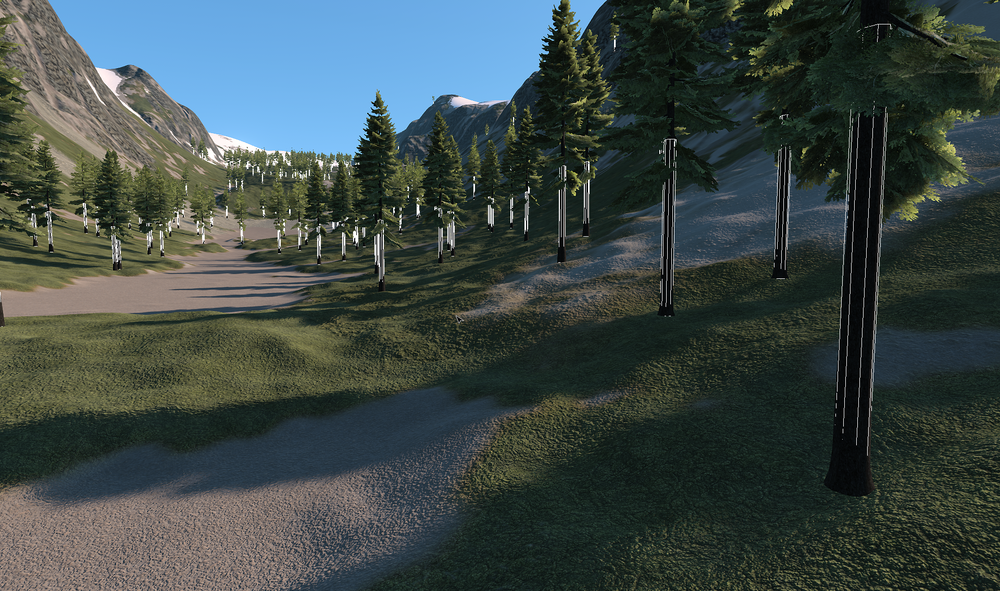

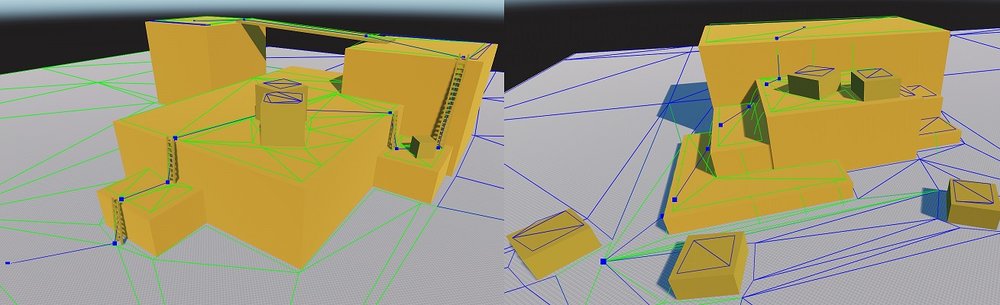

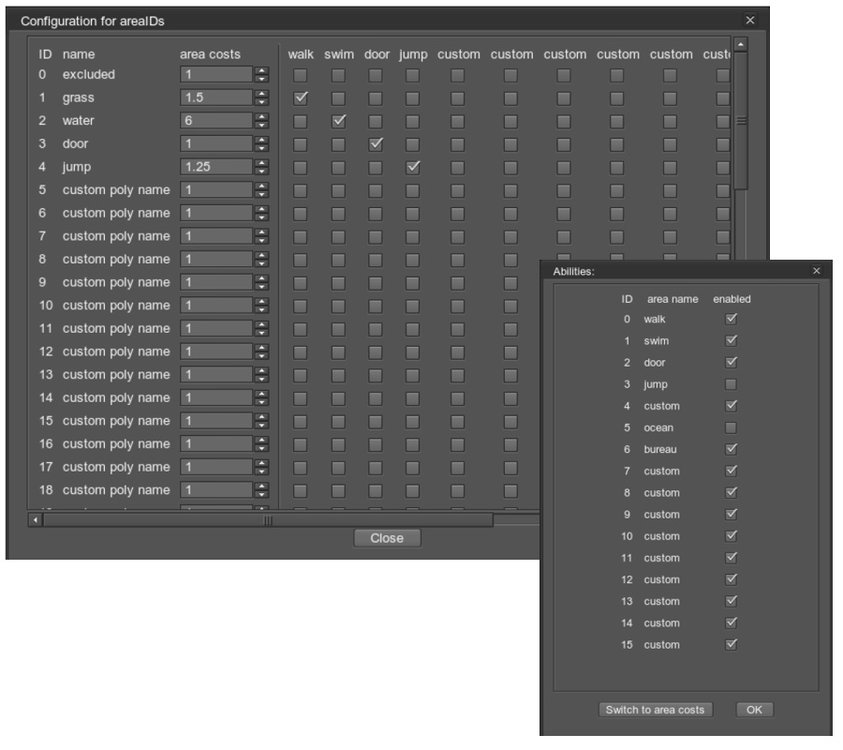

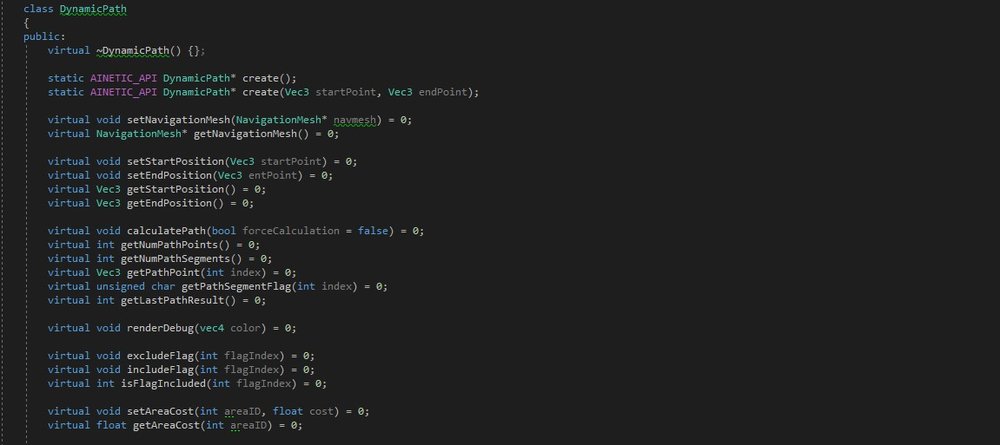

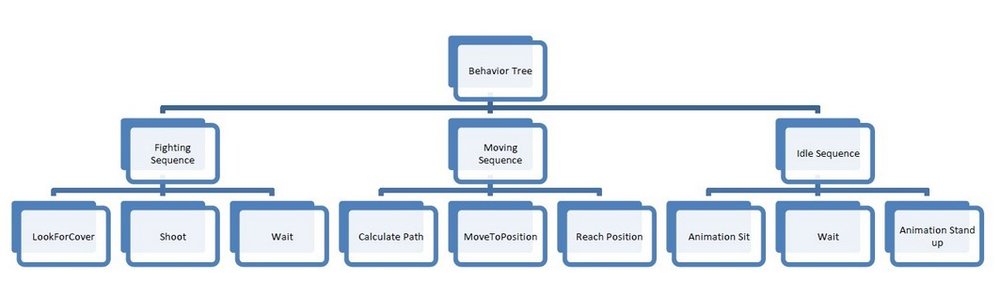

UNAIT – Unified AI Tool

After my old post about a naïve and straightforward implementation of dynamic navigation meshes, I got a lot of feedback and interest in such a plugin for UNIGINE. So I decided to recreate my implementation from scratch and add support for the recent UNIGINE engine versions (2.10+). On top of that, I decided to develop a more complete package of AI tools that are common in the industry. Therefore I want to give you a detailed insight into my AI plugin, UNAIT, which will be out at the beginning of next year!

Main features

This plugin covers (for now) four different areas of state-of-the-art AI in one bundle:

- Creation and modification of dynamic navigation meshes with full runtime support
- Dynamic pathfinding for all kinds of scenarios, with obstacle support as well
- Behavior Trees as a standard for lifelike behavior using complex but optimized decision trees
- Steering and Flocking Behavior for realistic movement of all kinds of agents as an out-of-the-box solution

(If you are interested: there are already plans for additional high-level behavior systems such as crowd movement and a perception and awareness tool.)

Dynamic Navigation Meshes

Overview

This system is the most complex but also the most powerful tool in this plugin. At any time (during project setup or even at runtime) you can create a dynamic navigation mesh (the area your agents can walk on) by adding, modifying or removing the input meshes that need to be included in this process. The system was built as an easy-to-use plugin, so the components take care of dynamically growing and shrinking the covered environment to find the best balance between memory usage and processing speed. At the moment, two different types of navigation meshes are implemented to fit your needs:

- Dynamic Navigation Meshes: Can easily be modified at runtime by adding objects to them, removing objects from them, or changing other general parameters (more info further down the post).
  Best for any dynamic world such as RTS games or destructible worlds.
- Static Navigation Meshes: A read-only variant that can be used as pre-generated navigation meshes for static worlds. While no runtime modification is possible, they fully support obstacles for simpler world changes.

Building such a navigation mesh is fairly simple and requires only a couple of lines of code! The generation process is split into two separate stages: object processing and rasterization. By decoupling those stages, you can modify your supported world objects multiple times without affecting navmesh recalculation or generation. When ready, just call the object's process() function and all modifications will be flushed to the internal systems.

Additionally, you can control every aspect of the navigation mesh generation, either at setup or at runtime. Want to change your agent's size, height or radius? Does it have a limited step height, or can it climb a hill only up to a certain slope angle? No problem for this plugin: you have full control over the whole generation process.

Supported objects

To fill your dynamic navigation mesh with data, a couple of UNIGINE objects are currently supported as input:

- Static Meshes: All kinds of static meshes with multiple surfaces
- Dynamic Meshes: Same as static meshes, but with optimizations regarding vertex transformation
- Landscape Layer Maps: Work for all kinds of resolutions, with support for holes
- Object Mesh Clusters
- Object Mesh Clutters
- Node References: Full support for nested object setups of all the above-mentioned objects

A built-in interface object (NavigationMeshObject) handles all those different types internally without much user interaction. Object processing can, for example, be done more frequently for dynamic meshes because of their vertex transformation, while your static meshes need to be processed only once.

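The slope limit mentioned above (agents that can climb a hill only up to a certain angle) is commonly applied per triangle while a navigation mesh is generated. The following is a minimal sketch of that general technique, not UNAIT's API: the `Vec3` type and the `isWalkable` helper are hypothetical, and a Z-up world is assumed. It compares the up component of the triangle normal with the cosine of the maximum slope angle.

```cpp
#include <cmath>

// Illustrative types and names only; none of this is part of the UNAIT API.
struct Vec3 { double x, y, z; };

static Vec3 sub(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}

static Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Returns true if the triangle (a, b, c) is flat enough for an agent whose
// maximum climbable slope is maxSlopeDegrees. Assumes Z is up and that the
// triangle is wound counter-clockwise when seen from above.
bool isWalkable(const Vec3& a, const Vec3& b, const Vec3& c, double maxSlopeDegrees) {
    const Vec3 n = cross(sub(b, a), sub(c, a));
    const double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    if (len == 0.0) return false;                  // degenerate triangle
    const double kPi = 3.14159265358979323846;
    const double minUpComponent = std::cos(maxSlopeDegrees * kPi / 180.0);
    return (n.z / len) >= minUpComponent;          // n.z / len is the cosine of the slope
}
```

With a 45 degree limit, a flat floor triangle passes the test while a vertical wall triangle is rejected, so only the floor would be rasterized as walkable area.
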
Worried about performance because the input meshes have a large number of triangles or your clutter objects consist of thousands of objects? On the one hand, every object type is optimized internally for the best balance of performance and memory. On the other hand, there is more you can set up:

Processing parameters

For each object you can choose one of five different processing parameters to speed up the rasterization and generation process even further:

- Whole mesh: The default. Every single vertex of every single surface is considered during object processing. While this can be slow, you can freely choose which surfaces are considered and which are ignored. For example, by using only the LOD2 surface as the object representation, you save valuable performance and memory that can be spent elsewhere.
- Bounding Box: Uses the object's bounding box as a simpler representation of the object.
- Box Model: A three-dimensional box with manual setup of length, width and height.
- Cylinder Model: Setup of radius, height and number of sides. You will use this one most often for your forest clutter/cluster objects.
- External Mesh: Not satisfied with the above-mentioned shapes? Use a completely different mesh for the object rasterization process.

Off-Mesh Connections and Convex Volumes

To fully support your 3D environment, your agents may need to climb up a ladder or jump down a cliff. Some areas might have different path costs or certain restrictions (mentioned later). While these cannot easily be represented by the above-mentioned objects, two additional built-in objects cover those scenarios as well:

- Off-Mesh Connections: Allow you to define a virtual path between two anchor points that can be traversed without any object representation. Such a virtual path can be set up as either unidirectional or bidirectional, for example to allow jumping off a cliff in one direction only, while your agent can climb a ladder in both directions.
- Convex Volumes: These objects are the decals of the navigation mesh family. On top of a generated dynamic navigation mesh, or even as an individual kind of object, a convex volume affects the area for your agents during path generation. Simply define the number of polygons and a height and you are ready to go!

Area IDs, abilities and path costs

Not yet satisfied with the above-mentioned features? Then there is more! With area IDs, surface flags (abilities) and path costs, you can tune your navigation mesh even further.

Area IDs

For each surface of each NavigationMeshObject, every Off-Mesh Connection and every Convex Volume, you can define one of up to 64 different area IDs to be taken into account. Those area IDs are necessary for surface flags (abilities) and path costs.

Surface Flags (Abilities)

In addition to multiple area IDs, you can define up to 16 different surface flags for each navigation mesh. They act like abilities: your agent must have the required abilities to traverse an area of your choice. Setting up those abilities is quite easy. First, define an area ID and enable the one or more abilities that are needed to move on that area. Second, use the built-in path system to grant or remove an ability.

Path costs

To tune the path search algorithm even further, you can customize the cost of each area ID individually; these costs are taken into account during the path exploration phase. Set up which surfaces of any object (with an area ID attached) should be preferred over others. Path costs work, of course, together with surface flags and give you great flexibility at runtime.

Dynamic Pathfinding

The built-in pathfinding solution is optimized for any complex navigation mesh you may generate. You simply define a start and an end point and let the system do everything else. Thanks to the asynchronous processing, you do not need to wait for an immediate result but are notified when the calculation is finished.

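The interplay of area IDs, abilities and path costs described above can be sketched with a bitmask per area and a cost table per path query. This is only an illustration under stated assumptions: `AreaTable`, `PathFilter`, `canTraverse`, `traversalCost` and the ability constants are hypothetical names, not the UNAIT interface; only the limits of 64 area IDs and 16 flags come from the post.

```cpp
#include <array>
#include <cstdint>

// Illustrative sketch only; names and layout are assumptions, not the UNAIT API.
constexpr int kMaxAreas = 64;                      // the post mentions up to 64 area IDs

// Up to 16 ability flags fit into one 16-bit mask.
constexpr std::uint16_t kAbilityWalk  = 1u << 0;
constexpr std::uint16_t kAbilitySwim  = 1u << 1;
constexpr std::uint16_t kAbilityClimb = 1u << 2;

// Per navigation mesh: which abilities each area ID requires (0 = none).
struct AreaTable {
    std::array<std::uint16_t, kMaxAreas> required{};
};

// Per path query: what the agent can do and how expensive each area is.
struct PathFilter {
    std::uint16_t abilities = 0;
    std::array<float, kMaxAreas> cost;
    PathFilter() { cost.fill(1.0f); }              // default: every area costs the same
};

// An area is traversable only if the agent has every ability it requires.
// areaId must be in the range [0, kMaxAreas).
bool canTraverse(const AreaTable& areas, const PathFilter& filter, int areaId) {
    const std::uint16_t needed = areas.required[areaId];
    return (filter.abilities & needed) == needed;
}

// Cost of moving `distance` units through an area. A path search would add
// this up per edge and prefer the cheapest total.
float traversalCost(const PathFilter& filter, int areaId, float distance) {
    return distance * filter.cost[areaId];
}
```

Keeping the cost table in the per-query filter rather than in the mesh mirrors the idea that each path can weight the same areas differently, for example a vehicle that strongly prefers roads next to a pedestrian that does not care.
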
The DynamicPath class considers your configured area IDs, path costs and required abilities and is integrated seamlessly into the navigation mesh system. You can even go a step further and define the path cost of each area ID individually for each DynamicPath.

Obstacles

Temporary objects that block an entity's path are called obstacles. Unlike NavMeshObjects, obstacles are a more lightweight concept because they serve as blocking units without any further definition of costs or required abilities. Therefore, obstacles are taken into account for both dynamic and static navigation meshes. Currently, there are three different types of obstacles: boxes, cylinders and oriented boxes.

What else?

Multi-threaded support

The complete plugin works in any multithreaded environment and has its own internal thread pool that is aligned with UNIGINE's threading system. You can even define the number of cores the system will use, to squeeze even the last CPU cycle out of your PC. The following subsystems are multithreaded:

- Building your dynamic navigation mesh
- Processing NavigationMeshObjects and adding them to or removing them from the dynamic navigation meshes
- Working with Off-Mesh Connections and Convex Volumes
- Processing obstacles
- Dynamic pathfinding

Full double precision

Every world-oriented function is built for both single and double precision. No floating-point restrictions, and therefore “no” virtual restrictions, apply when integrating this plugin into your UNIGINE project.

Extended debugging functionality

The plugin provides essential debugging utilities for use while creating your project: from simple log-to-file functions for NavigationMeshObject setup, navigation mesh building and pathfinding, up to visual helper tools available during the complete runtime.

Additional standardized functions

For any built navigation mesh (static or dynamic) you have access to various functions that might be useful during your project.
Looking for a random point on your navigation mesh? Do you need to run an intersection test against it? No problem at all. We've got you covered!

Using UNIGINE interface style

To make your work as pleasant as possible, I applied the UNIGINE-style code interface to the plugin as well. So calling a create() function will do all the work internally. Calling the object's destructor will clear all the internally used memory and address-related data so you always stay as safe as possible!

Behavior Trees

Behavior Trees, or decision trees, are the industry standard for decision making and are used in almost every game nowadays. A Behavior Tree represents the “brain” of an agent and stores its complete logic. Behavior Trees describe complex behaviors of your agents by splitting them into simple tasks that are called in isolation. This simplicity allows even non-programmers to understand and modify large BTs easily. With this plugin you not only get access to this standard approach; I also extended it to make working with Behavior Trees a bit easier.

To implement your complex logic in a simple and meaningful way, the system is divided into multiple types of tasks that serve different purposes during each call:

- BehaviorTree Task: This task is the entry point for all of your logic and is often also called the "Root Node". It needs to be called every update frame to evaluate your logic and has only one child, which is called immediately.
- Leaf Tasks: A leaf task runs the actual logic and is mainly overridden by you. A leaf task may run over several update frames and has only three states: RUNNING, SUCCESS and FAILED.
- Selector/Sequence/Parallel Tasks: Run multiple child tasks one after another, or several at the same time. Depending on a child task's output (SUCCESS, FAILED), they might call additional child tasks.
- Decorator Tasks: Have only one child and modify the output of their child path.
- Guard Tasks: Can be attached to any task and are called as soon as the attached task is about to be called. They may prevent that task from running.

The complete Behavior Tree system comes with a simplified Blackboard approach, which is used as central storage for all necessary variables of your behavior tree. While you will mostly access those variables directly from your agents, the Blackboard is also a standard, so I implemented it. :)

All in all, the Behavior Tree plugin works seamlessly with the UNIGINE Component System and can be called in any multithreaded environment as well. You can even run one BehaviorTree for multiple agents to save memory!

Steering and Flocking Behavior

If you have worked with artificial intelligence in games and simulations in recent years, you have surely heard about Reynolds' flocking behavior at least once. Flocking Behavior is used when small or medium-sized crowds need to move together as a group while also avoiding each other. Steering Behavior, on the other hand, is a standard technique for giving your agents lifelike movement during their virtual lifetime. Whether moving alone along a virtual path, pursuing or evading other agents, following a leader or queuing at a virtual bottleneck, you will need some kind of steering behavior.

With this plugin, those two systems are combined into one and work seamlessly with each other! The interface classes will be the starting point for your next AI, giving access to a couple of movement options and handling their virtual counterparts as well.

- Flocking Behavior: Alignment, cohesion and avoidance are the three parts of every flocking movement in a virtual world. The system moves your agents automatically and calculates everything you need for a realistic crowd. I even optimized the standard system further, so it is now twice as fast as its counterpart.
- Steering Behavior: Multiple movement behaviors are standardized for your virtual agents:
  - Seek/Arrival and Wander: For the standard movement approach
  - Flee/Pursuit and Evade: For basic predator/hunting simulation
  - FollowLeader: For small group movement
  - Queueing: To move as fast and as realistically as possible through any virtual bottleneck

As always, this system also works in any multithreaded environment and can be integrated into your UNIGINE ComponentSystem-based environment.

Final Notes

These systems will be ready to use at the beginning of next year. The main integration part is done, and I want to spend the next weeks on documentation, demos and basic examples. After that, I want to spend some time implementing the following features:

- Full UNIGINE Editor support: Currently ALL systems are API-access only. The idea is that at least the navigation mesh part will be fully operational in the editor. A visual Behavior Tree implementation might be added as well.
- Linux support: Shouldn't be a big deal, but will take some time.
- C# wrapper: This will be a lot more complicated, but the C++ API should be migrated to C# as well.

If you are interested, feel free to contact me; I am more than happy to help. I hope you understand that this plugin will not be shipped for free. So just PM or mail me and I am sure we can find an agreement.

All the best
Christian
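
The task types described in the Behavior Trees section of the post (leaf, sequence, selector and decorator tasks with the three states RUNNING, SUCCESS and FAILED) can be sketched in plain C++ as follows. All class names here are assumptions made for illustration and do not reflect the plugin's actual interface; guards, parallel tasks and the blackboard are left out to keep the sketch short.

```cpp
#include <cstddef>
#include <functional>
#include <memory>
#include <utility>
#include <vector>

// Illustrative sketch only; class names are assumptions, not the UNAIT API.
enum class Status { RUNNING, SUCCESS, FAILED };

struct Task {
    virtual ~Task() = default;
    virtual Status update() = 0;          // called once per update frame
};

// Leaf task: wraps user logic that may need several frames to finish.
struct Leaf : Task {
    std::function<Status()> action;
    explicit Leaf(std::function<Status()> fn) : action(std::move(fn)) {}
    Status update() override { return action(); }
};

// Shared bookkeeping for tasks with several children.
struct Composite : Task {
    std::vector<std::unique_ptr<Task>> children;
    std::size_t current = 0;              // child to resume on the next frame
    void add(std::unique_ptr<Task> child) { children.push_back(std::move(child)); }
};

// Sequence: runs children in order and stops at the first FAILED child.
struct Sequence : Composite {
    Status update() override {
        while (current < children.size()) {
            const Status s = children[current]->update();
            if (s == Status::RUNNING) return s;             // resume here next frame
            if (s == Status::FAILED) { current = 0; return s; }
            ++current;                                      // SUCCESS: try next child
        }
        current = 0;
        return Status::SUCCESS;
    }
};

// Selector: tries children in order and stops at the first SUCCESS.
struct Selector : Composite {
    Status update() override {
        while (current < children.size()) {
            const Status s = children[current]->update();
            if (s == Status::RUNNING) return s;
            if (s == Status::SUCCESS) { current = 0; return s; }
            ++current;                                      // FAILED: try next child
        }
        current = 0;
        return Status::FAILED;
    }
};

// Decorator example: swaps SUCCESS and FAILED of its single child.
struct Inverter : Task {
    std::unique_ptr<Task> child;
    explicit Inverter(std::unique_ptr<Task> c) : child(std::move(c)) {}
    Status update() override {
        const Status s = child->update();
        if (s == Status::SUCCESS) return Status::FAILED;
        if (s == Status::FAILED) return Status::SUCCESS;
        return s;                                           // RUNNING passes through
    }
};
```

In this sketch the per-frame progress (`current`) lives inside the tree nodes, so each agent needs its own tree instance. Sharing one tree between several agents, as the post mentions, would require moving that state into per-agent storage.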


Hello,

I'm having a little problem with implementing AI. It looks like a beginner issue, but I'm not able to figure it out. I'm trying to create an animated AI agent that moves towards another, static agent (the ally). Both agents, a ground plane and a Nav sector of size 1024 * 1024 * 9 have been created in the world. I've created a custom AI class with a Node-type member variable initialized using findNode(). Once that succeeds, I go on to route creation. This is what is done:

    Init() {
        route = new PathRoute(2.0f);
        route.setMaxAngle(0.5f);
        route.create2D(position, ally.position);
    }

    Update() {
        float ifps = engine.game.getIFps();
        Vec3 dir = route.getPoint(1) - route.getPoint(0);
        if(length(dir) > EPSILON) {
            orientation = lerp(orientation, quat(setTo(Vec3_zero, dir, Vec3(0.0f, 0.0f, 1.0f))), ifps * 8.0f);
            position += self.getWorldDirection() * ifps * 16.0f;
            self.setWorldDirection(translate(position) * orientation);
        }
        route.renderVisualizer(vec4_one);
        route.create2D(position, ally.position);
    }

The problem is with the getPoint() call: the route is not being created, and route.getNumPoints() returns 0.

ERROR: "PathRoute::getPointNum(): bad point number"

Have I missed something?

Thanks in advance.
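
The error message indicates that getPoint() is called on a route that contains no points. Whatever the reason for the empty route turns out to be, the update code can avoid the error by checking the point count before indexing. The following is a minimal C++ sketch of that guard; `RouteStub` and `nextDirection` are hypothetical stand-ins, since the real PathRoute class is only available inside the engine.

```cpp
#include <cmath>
#include <vector>

// Stand-in types for illustration; this is not the UNIGINE PathRoute class.
struct Vec3 { float x, y, z; };

struct RouteStub {
    std::vector<Vec3> points;   // stays empty while no route has been found
};

// Writes the normalized direction from the first to the second route point
// into `out` and returns true. Returns false, leaving `out` untouched, when
// the route has fewer than two points or the two points coincide.
bool nextDirection(const RouteStub& route, Vec3& out, float epsilon = 1e-6f) {
    if (route.points.size() < 2) return false;   // nothing to index yet
    const Vec3& a = route.points[0];
    const Vec3& b = route.points[1];
    const Vec3 d{b.x - a.x, b.y - a.y, b.z - a.z};
    const float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    if (len <= epsilon) return false;
    out = Vec3{d.x / len, d.y / len, d.z / len};
    return true;
}
```

An update function built this way would simply skip the movement step in frames where `nextDirection` returns false, instead of indexing into an empty route.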